init

- README.md +77 -0

- added_tokens.json +1 -0

- config.json +162 -0

- preprocessor_config.json +21 -0

- pytorch_model.bin +3 -0

- special_tokens_map.json +1 -0

- tokenizer_config.json +1 -0

- vocab.json +0 -0

README.md

ADDED

@@ -0,0 +1,77 @@

---
language: en
datasets:
- librispeech_asr
- common_voice
tags:
- speech
license: apache-2.0
---

|

| 10 |

+

|

| 11 |

+

# M-CTC-T

|

| 12 |

+

|

| 13 |

+

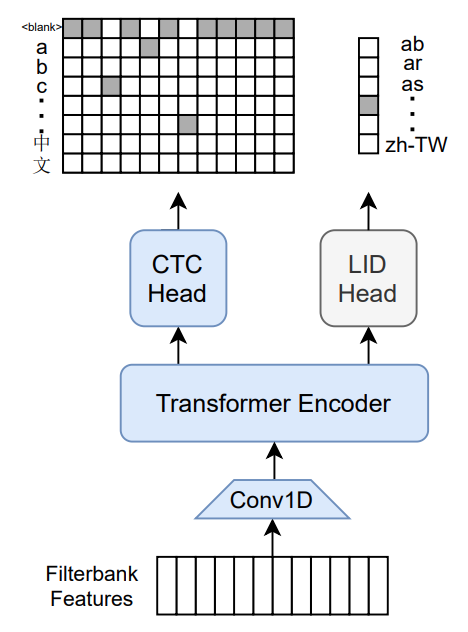

Massively multilingual speech recognizer from Meta AI. The model is a 1B-parameter transformer encoder with a CTC head over 8065 character labels and a language identification head over 60 language ID labels. It is first trained on Common Voice (version 6.1, December 2020 release) and VoxPopuli, then trained further on Common Voice only. The labels are unnormalized character-level transcripts (punctuation and capitalization are not removed). The model takes as input Mel filterbank features computed from a 16 kHz audio signal.
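The 60 language ID labels are listed in the `id2label` field of config.json. The sketch below shows how a vector of 60 language-ID logits for one utterance could be mapped to a language code with a softmax. It assumes you already have those logits; this commit does not show how to obtain them from the ported model. The scores in the example are made up.

```python
import math

# Language codes from the "id2label" field of config.json, in id order
# (LANG_CODES[i] is the label for id i). 60 entries in total.
LANG_CODES = [
    "ab", "ar", "as", "br", "ca", "cnh", "cs", "cv", "cy", "de",
    "dv", "el", "en", "eo", "es", "et", "eu", "fa", "fi", "fr",
    "fy-NL", "ga-IE", "hi", "hsb", "hu", "ia", "id", "it", "ja", "ka",
    "kab", "ky", "lg", "lt", "lv", "mn", "mt", "nl", "or", "pa-IN",
    "pl", "pt", "rm-sursilv", "rm-vallader", "ro", "ru", "rw", "sah", "sl", "sv-SE",
    "ta", "th", "tr", "tt", "uk", "vi", "vot", "zh-CN", "zh-HK", "zh-TW",
]


def predict_language(scores):
    """Map one vector of 60 language-ID logits to (language_code, probability)."""
    if len(scores) != len(LANG_CODES):
        raise ValueError(f"expected {len(LANG_CODES)} scores, got {len(scores)}")
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # numerically stable softmax
    total = sum(exps)
    best = max(range(len(scores)), key=lambda i: scores[i])
    return LANG_CODES[best], exps[best] / total


# Hypothetical logits: strongest evidence for label id 12 ("en").
scores = [0.0] * 60
scores[12] = 5.0
print(predict_language(scores))
```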

The original Flashlight code, model checkpoints, and Colab notebook can be found in the [wav2letter `mling_pl` recipe](https://github.com/flashlight/wav2letter/tree/main/recipes/mling_pl).

## Citation

[Paper](https://arxiv.org/abs/2111.00161)

Authors: Loren Lugosch, Tatiana Likhomanenko, Gabriel Synnaeve, Ronan Collobert

```
@article{lugosch2021pseudo,
  title={Pseudo-Labeling for Massively Multilingual Speech Recognition},
  author={Lugosch, Loren and Likhomanenko, Tatiana and Synnaeve, Gabriel and Collobert, Ronan},
  journal={ICASSP},
  year={2022}
}
```

Additional thanks to [Chan Woo Kim](https://huggingface.co/cwkeam) and [Patrick von Platen](https://huggingface.co/patrickvonplaten) for porting the model from Flashlight to PyTorch.

|

| 36 |

+

|

| 37 |

+

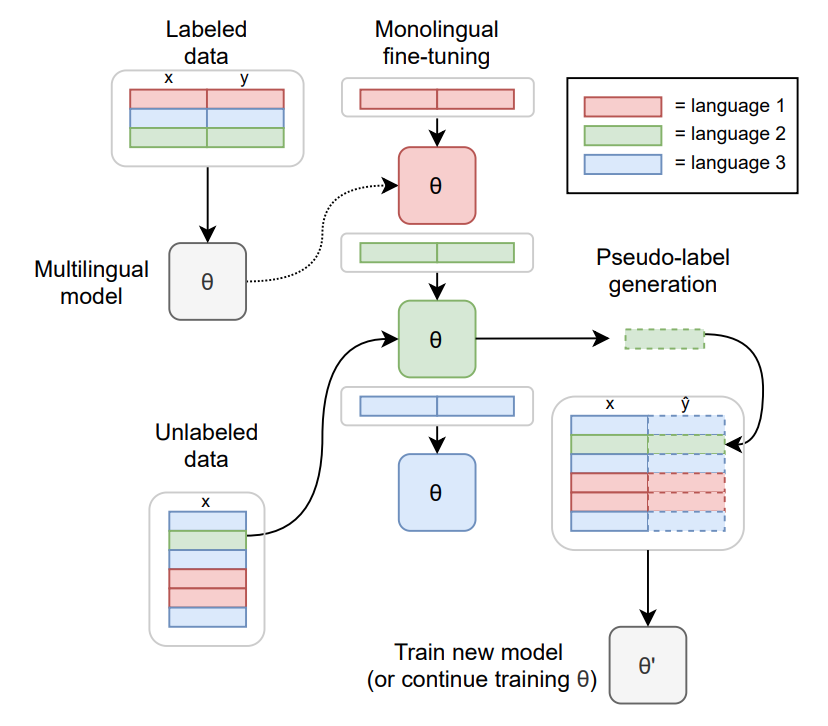

# Training method

TO-DO: replace with the training diagram from the paper

For details on how the model was trained, see the [official paper](https://arxiv.org/abs/2111.00161).

# Usage

To transcribe audio files, the model can be used as a standalone acoustic model as follows:

```python
import torch
from datasets import load_dataset
from transformers import MCTCTForCTC, MCTCTProcessor

model = MCTCTForCTC.from_pretrained("speechbrain/mctct-large")
processor = MCTCTProcessor.from_pretrained("speechbrain/mctct-large")

# load a dummy dataset and read the first sound file (16 kHz)
ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")
sample = ds[0]["audio"]

# extract Mel filterbank input features
input_features = processor(
    sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt"
).input_features

# retrieve logits (inference only, so gradients are not needed)
with torch.no_grad():
    logits = model(input_features).logits

# take argmax and decode
predicted_ids = torch.argmax(logits, dim=-1)
transcription = processor.batch_decode(predicted_ids)
```
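`processor.batch_decode(predicted_ids)` turns per-frame argmax ids into text. As we understand it, the `Wav2Vec2CTCTokenizer` named in tokenizer_config.json merges repeated ids, drops the pad token (which serves as the CTC blank), and maps the word delimiter `|` to a space. The sketch below illustrates that greedy CTC decoding idea in plain Python. It uses a hypothetical toy vocabulary and is not the tokenizer's actual implementation.

```python
from itertools import groupby


def ctc_greedy_decode(frame_ids, id_to_char, blank_id, word_delimiter="|"):
    """Greedy CTC decoding of one utterance's per-frame argmax ids."""
    # 1) merge runs of identical consecutive ids (one character held over several frames)
    collapsed = [label for label, _ in groupby(frame_ids)]
    # 2) drop the blank symbol, which only separates genuine repeats such as "ll"
    chars = [id_to_char[i] for i in collapsed if i != blank_id]
    # 3) turn word-delimiter tokens into spaces
    return "".join(chars).replace(word_delimiter, " ").strip()


# Hypothetical toy vocabulary; the real mapping is vocab.json with 8065 labels.
toy_vocab = {0: "<pad>", 1: "|", 2: "h", 3: "e", 4: "l", 5: "o", 6: "w", 7: "r", 8: "d"}

# Hypothetical per-frame argmax ids, shaped like one row of `predicted_ids`.
frames = [2, 2, 3, 0, 4, 4, 0, 4, 5, 1, 1, 6, 5, 7, 4, 8, 8]
print(ctc_greedy_decode(frames, toy_vocab, blank_id=0))
```

The blank between the two runs of id 4 is what keeps the double "l". Without it, the repeated frames would collapse into a single character.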

Results on Common Voice, averaged over all languages:

*Character error rate (CER)*:

| Valid (%) | Test (%) |
|-----------|----------|
| 21.4      | 23.3     |
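CER is the character-level edit distance between hypothesis and reference, divided by the reference length: CER = (S + D + I) / N, usually reported as a percentage. Below is a minimal sketch. The card does not say how text is normalized before scoring or how per-language scores are averaged, so this sketch compares raw strings, which means case and punctuation errors count.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (or match when r == h)
            ))
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate in percent: edit distance / reference length * 100."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return 100.0 * levenshtein(ref, hyp) / len(ref)


# Raw strings are compared as-is; the normalization behind the reported numbers is not stated.
print(f"{cer('hello world', 'helo world'):.1f}")
```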


added_tokens.json

ADDED

@@ -0,0 +1 @@

```json
{"<s>": 8065, "</s>": 8066}
```

config.json

ADDED

@@ -0,0 +1,162 @@

```json
{
  "architectures": [
    "MCTCTForAudioFrameClassification"
  ],
  "attention_head_dim": 384,
  "attention_probs_dropout_prob": 0.3,
  "bos_token_id": 0,
  "conv_channels": null,
  "conv_dropout": 0.3,
  "conv_glu_dim": 1,
  "conv_kernel": [
    7
  ],
  "conv_stride": [
    3
  ],
  "ctc_loss_reduction": "sum",
  "ctc_zero_infinity": false,
  "eos_token_id": 2,
  "hidden_act": "relu",
  "hidden_dropout_prob": 0.3,
  "hidden_size": 1536,
  "id2label": {
    "0": "ab",
    "1": "ar",
    "10": "dv",
    "11": "el",
    "12": "en",
    "13": "eo",
    "14": "es",
    "15": "et",
    "16": "eu",
    "17": "fa",
    "18": "fi",
    "19": "fr",
    "2": "as",
    "20": "fy-NL",
    "21": "ga-IE",
    "22": "hi",
    "23": "hsb",
    "24": "hu",
    "25": "ia",
    "26": "id",
    "27": "it",
    "28": "ja",
    "29": "ka",
    "3": "br",
    "30": "kab",
    "31": "ky",
    "32": "lg",
    "33": "lt",
    "34": "lv",
    "35": "mn",
    "36": "mt",
    "37": "nl",
    "38": "or",
    "39": "pa-IN",
    "4": "ca",
    "40": "pl",
    "41": "pt",
    "42": "rm-sursilv",
    "43": "rm-vallader",
    "44": "ro",
    "45": "ru",
    "46": "rw",
    "47": "sah",
    "48": "sl",
    "49": "sv-SE",
    "5": "cnh",
    "50": "ta",
    "51": "th",
    "52": "tr",
    "53": "tt",
    "54": "uk",
    "55": "vi",
    "56": "vot",
    "57": "zh-CN",
    "58": "zh-HK",
    "59": "zh-TW",
    "6": "cs",
    "7": "cv",
    "8": "cy",
    "9": "de"
  },
  "initializer_range": 0.02,
  "input_channels": 1,
  "input_feat_per_channel": 80,
  "intermediate_size": 6144,
  "label2id": {
    "ab": "0",
    "ar": "1",
    "as": "2",
    "br": "3",
    "ca": "4",
    "cnh": "5",
    "cs": "6",
    "cv": "7",
    "cy": "8",
    "de": "9",
    "dv": "10",
    "el": "11",
    "en": "12",
    "eo": "13",
    "es": "14",
    "et": "15",
    "eu": "16",
    "fa": "17",
    "fi": "18",
    "fr": "19",
    "fy-NL": "20",
    "ga-IE": "21",
    "hi": "22",
    "hsb": "23",
    "hu": "24",
    "ia": "25",
    "id": "26",
    "it": "27",
    "ja": "28",
    "ka": "29",
    "kab": "30",
    "ky": "31",
    "lg": "32",
    "lt": "33",
    "lv": "34",
    "mn": "35",
    "mt": "36",
    "nl": "37",
    "or": "38",
    "pa-IN": "39",
    "pl": "40",
    "pt": "41",
    "rm-sursilv": "42",
    "rm-vallader": "43",
    "ro": "44",
    "ru": "45",
    "rw": "46",
    "sah": "47",
    "sl": "48",
    "sv-SE": "49",
    "ta": "50",
    "th": "51",
    "tr": "52",
    "tt": "53",
    "uk": "54",
    "vi": "55",
    "vot": "56",
    "zh-CN": "57",
    "zh-HK": "58",
    "zh-TW": "59"
  },
  "layer_norm_eps": 1e-05,
  "layerdrop": 0.3,
  "max_position_embeddings": 920,
  "model_type": "mctct",
  "num_attention_heads": 4,
  "num_conv_layers": 1,
  "num_hidden_layers": 36,
  "pad_token_id": 1,
  "torch_dtype": "float32",
  "transformers_version": "4.20.0.dev0",
  "vocab_size": 8065
}
```
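As a sanity check of the "1B-param" figure in the model card, the encoder size can be estimated from config.json. This is a rough sketch that assumes a plain transformer encoder layer (Q/K/V and output projections, a two-layer feed-forward block, two LayerNorms). It ignores the convolutional front end, any position embeddings, and the language-ID head, so it is an estimate, not the ported model's exact count.

```python
# Hyperparameters copied from config.json above.
HIDDEN_SIZE = 1536
INTERMEDIATE_SIZE = 6144
NUM_LAYERS = 36
NUM_HEADS = 4
HEAD_DIM = 384
VOCAB_SIZE = 8065


def linear_params(n_in: int, n_out: int) -> int:
    """Weights plus bias of a dense layer."""
    return n_in * n_out + n_out


def encoder_layer_params() -> int:
    """Rough count for one encoder layer, assuming a standard transformer layout."""
    attn_dim = NUM_HEADS * HEAD_DIM  # 4 * 384 = 1536, equal to HIDDEN_SIZE
    attention = 3 * linear_params(HIDDEN_SIZE, attn_dim)  # query, key, value
    attention += linear_params(attn_dim, HIDDEN_SIZE)  # attention output projection
    feed_forward = linear_params(HIDDEN_SIZE, INTERMEDIATE_SIZE)
    feed_forward += linear_params(INTERMEDIATE_SIZE, HIDDEN_SIZE)
    layer_norms = 2 * (2 * HIDDEN_SIZE)  # two LayerNorms, weight + bias each
    return attention + feed_forward + layer_norms


per_layer = encoder_layer_params()
ctc_head = linear_params(HIDDEN_SIZE, VOCAB_SIZE)  # projection onto the 8065 CTC labels
total = NUM_LAYERS * per_layer + ctc_head
print(f"per layer: {per_layer:,}  total (approx.): {total / 1e9:.2f}B")
```

Roughly two thirds of each layer's parameters are in the feed-forward block, because `intermediate_size` is four times `hidden_size`.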

preprocessor_config.json

ADDED

@@ -0,0 +1,21 @@

```json
{
  "K": 257,
  "do_normalize": true,
  "feature_extractor_type": "MCTCFeatureExtractor",
  "feature_size": 80,
  "frame_signal_scale": 32768.0,
  "hop_length": 10,
  "mel_floor": 1.0,
  "n_fft": 512,
  "normalize_means": true,
  "normalize_vars": true,
  "padding_side": "right",
  "padding_value": 0.0,
  "preemphasis_coeff": 0.97,
  "return_attention_mask": false,
  "sample_size": 400,
  "sample_stride": 160,
  "sampling_rate": 16000,
  "win_function": "hamming_window",
  "win_length": 25
}
```
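The preprocessor settings imply 25 ms analysis windows (`win_length`, or `sample_size` = 400 samples at 16 kHz) with a 10 ms hop (`hop_length`, or `sample_stride` = 160 samples). `K` = 257 matches `n_fft // 2 + 1` frequency bins for `n_fft` = 512. The sketch below estimates how many feature frames a clip produces and how many remain after the single convolution in config.json (`conv_kernel` 7, `conv_stride` 3). It assumes no padding at the signal edges and a Conv1d padding of `kernel // 2`. Both are our assumptions, not taken from the feature extractor or model code.

```python
SAMPLING_RATE = 16_000  # "sampling_rate"
FRAME_SIZE = 400        # "sample_size": 25 ms ("win_length") at 16 kHz
FRAME_STRIDE = 160      # "sample_stride": 10 ms ("hop_length") at 16 kHz
N_FFT = 512             # "n_fft"
CONV_KERNEL = 7         # "conv_kernel" in config.json
CONV_STRIDE = 3         # "conv_stride" in config.json

assert FRAME_SIZE == 25 * SAMPLING_RATE // 1000
assert FRAME_STRIDE == 10 * SAMPLING_RATE // 1000
assert N_FFT // 2 + 1 == 257  # "K"


def num_frames(num_samples: int) -> int:
    """Number of full analysis windows, assuming no padding at the signal edges."""
    if num_samples < FRAME_SIZE:
        return 0
    return 1 + (num_samples - FRAME_SIZE) // FRAME_STRIDE


def conv_output_length(length: int, kernel: int = CONV_KERNEL, stride: int = CONV_STRIDE) -> int:
    """Standard Conv1d output length, assuming padding of kernel // 2 (an assumption)."""
    padding = kernel // 2
    return (length + 2 * padding - (kernel - 1) - 1) // stride + 1


frames = num_frames(SAMPLING_RATE)  # one second of audio
print(frames, "feature frames ->", conv_output_length(frames), "frames after the conv layer")
```

Under these assumptions, the stride-3 convolution cuts the sequence the transformer layers attend over to about a third of the 10 ms feature frames.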

pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:4ebe460f70052e0f0b8c1039e11b9bb0a9e2c135fb98b8e88562e5a5936d073f
size 4186831517
```
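pytorch_model.bin is committed as a Git LFS pointer: three `key value` lines giving the spec version, the object's SHA-256, and its size in bytes. A minimal sketch of reading the pointer and turning the size into an approximate parameter count, assuming 4 bytes per float32 value (`"torch_dtype": "float32"` in config.json). The result is an upper bound, because the checkpoint also contains serialization overhead and any non-parameter buffers.

```python
# Text of the Git LFS pointer committed as pytorch_model.bin (copied from above).
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:4ebe460f70052e0f0b8c1039e11b9bb0a9e2c135fb98b8e88562e5a5936d073f
size 4186831517
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line of an LFS pointer into a dict entry."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


fields = parse_lfs_pointer(POINTER)
size_bytes = int(fields["size"])
BYTES_PER_FLOAT32 = 4
# Upper bound: the file also holds pickle/serialization overhead besides raw weights.
approx_params = size_bytes / BYTES_PER_FLOAT32
print(f"{size_bytes / 1e9:.2f} GB -> about {approx_params / 1e9:.2f}B float32 values")
```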

special_tokens_map.json

ADDED

@@ -0,0 +1 @@

```json
{"bos_token": "<s>", "eos_token": "</s>", "unk_token": "<unk>", "pad_token": "<pad>", "additional_special_tokens": [{"content": "<s>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true}, {"content": "</s>", "single_word": false, "lstrip": false, "rstrip": false, "normalized": true}]}
```

tokenizer_config.json

ADDED

@@ -0,0 +1 @@

```json
{"unk_token": "<unk>", "bos_token": "<s>", "eos_token": "</s>", "pad_token": "<pad>", "do_lower_case": false, "word_delimiter_token": "|", "replace_word_delimiter_char": " ", "return_attention_mask": false, "do_normalize": true, "special_tokens_map_file": "./mctc-large/special_tokens_map.json", "name_or_path": "./mctc-large", "tokenizer_class": "Wav2Vec2CTCTokenizer"}
```

vocab.json

ADDED

The diff for this file is too large to render.