Spaces: Running on Zero


Upload 1425 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +25 -0

- custom_nodes/comfyui_controlnet_aux/LICENSE.txt +201 -0

- custom_nodes/comfyui_controlnet_aux/NotoSans-Regular.ttf +3 -0

- custom_nodes/comfyui_controlnet_aux/README.md +252 -0

- custom_nodes/comfyui_controlnet_aux/UPDATES.md +44 -0

- custom_nodes/comfyui_controlnet_aux/__init__.py +214 -0

- custom_nodes/comfyui_controlnet_aux/config.example.yaml +20 -0

- custom_nodes/comfyui_controlnet_aux/dev_interface.py +6 -0

- custom_nodes/comfyui_controlnet_aux/examples/CNAuxBanner.jpg +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/ExecuteAll.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/ExecuteAll1.jpg +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/ExecuteAll2.jpg +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/comfyui-controlnet-aux-logo.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_animal_pose.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_anime_face_segmentor.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_anyline.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_densepose.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_depth_anything.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_depth_anything_v2.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_dsine.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_marigold.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_marigold_flat.jpg +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_mesh_graphormer.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_metric3d.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_onnx.png +0 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_recolor.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_save_kps.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_teed.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_torchscript.png +3 -0

- custom_nodes/comfyui_controlnet_aux/examples/example_unimatch.png +3 -0

- custom_nodes/comfyui_controlnet_aux/hint_image_enchance.py +233 -0

- custom_nodes/comfyui_controlnet_aux/install.bat +20 -0

- custom_nodes/comfyui_controlnet_aux/log.py +80 -0

- custom_nodes/comfyui_controlnet_aux/lvminthin.py +87 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/anime_face_segment.py +43 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/anyline.py +87 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/binary.py +29 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/canny.py +30 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/color.py +26 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/densepose.py +31 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/depth_anything.py +55 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/depth_anything_v2.py +56 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/diffusion_edge.py +41 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/dsine.py +31 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/dwpose.py +162 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/hed.py +53 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/inpaint.py +32 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/leres.py +32 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/lineart.py +30 -0

- custom_nodes/comfyui_controlnet_aux/node_wrappers/lineart_anime.py +27 -0

.gitattributes

CHANGED

@@ -42,3 +42,28 @@ custom_nodes/ComfyQR/example_generations/unscannable_00001_fixed_.png filter=lfs
 custom_nodes/ComfyQR/img/for_deletion/badgers_levels_adjusted.png filter=lfs diff=lfs merge=lfs -text
 custom_nodes/ComfyQR/img/for_deletion/badgers.png filter=lfs diff=lfs merge=lfs -text
 custom_nodes/ComfyQR/img/node-mask-qr-errors.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/CNAuxBanner.jpg filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/comfyui-controlnet-aux-logo.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_animal_pose.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_anime_face_segmentor.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_anyline.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_densepose.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_depth_anything_v2.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_depth_anything.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_dsine.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_marigold_flat.jpg filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_marigold.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_mesh_graphormer.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_metric3d.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_recolor.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_save_kps.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_teed.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_torchscript.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/example_unimatch.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/ExecuteAll.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/ExecuteAll1.jpg filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/examples/ExecuteAll2.jpg filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/NotoSans-Regular.ttf filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/src/custom_controlnet_aux/mesh_graphormer/hand_landmarker.task filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/src/custom_controlnet_aux/tests/test_image.png filter=lfs diff=lfs merge=lfs -text
+custom_nodes/comfyui_controlnet_aux/tests/pose.png filter=lfs diff=lfs merge=lfs -text
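Every added `.gitattributes` line above has the same shape: a literal path followed by `filter=lfs diff=lfs merge=lfs -text`, which is the attribute string `git lfs track <pattern>` appends. The sketch below, under the assumption that patterns are literal, space-free paths (true for all 25 additions), generates such rules and reads back which paths are routed through LFS. `lfs_rules` and `lfs_tracked` are hypothetical helpers, not part of Git or git-lfs, and they ignore globbing, quoting and macro attributes.

```python
from typing import Iterable, List, Set

# Attribute string that `git lfs track <pattern>` appends after the pattern.
LFS_ATTRS = "filter=lfs diff=lfs merge=lfs -text"


def lfs_rules(paths: Iterable[str]) -> List[str]:
    """Build one .gitattributes line per literal path (hypothetical helper)."""
    return [f"{path} {LFS_ATTRS}" for path in paths]


def lfs_tracked(gitattributes_text: str) -> Set[str]:
    """Return the patterns whose rule sets filter=lfs.

    Assumes literal, space-free patterns like the ones in this hunk; real
    .gitattributes matching (globs, quoting, macro attributes) is richer.
    """
    tracked: Set[str] = set()
    for line in gitattributes_text.splitlines():
        parts = line.split()
        if len(parts) < 2 or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if "filter=lfs" in parts[1:]:
            tracked.add(parts[0])
    return tracked


if __name__ == "__main__":
    added = [
        "custom_nodes/comfyui_controlnet_aux/NotoSans-Regular.ttf",
        "custom_nodes/comfyui_controlnet_aux/tests/pose.png",
    ]
    text = "\n".join(lfs_rules(added))
    print(text)
    print(sorted(lfs_tracked(text)))
```

Keeping one exact path per line, as this commit does, avoids accidentally sending unrelated files (for example every `.png` under ComfyQR) through LFS.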

custom_nodes/comfyui_controlnet_aux/LICENSE.txt

ADDED

@@ -0,0 +1,201 @@
+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.
+
+END OF TERMS AND CONDITIONS
+
+APPENDIX: How to apply the Apache License to your work.
+
+To apply the Apache License to your work, attach the following
+boilerplate notice, with the fields enclosed by brackets "[]"
+replaced with your own identifying information. (Don't include
+the brackets!) The text should be enclosed in the appropriate
+comment syntax for the file format. We also recommend that a
+file or class name and description of purpose be included on the
+same "printed page" as the copyright notice for easier
+identification within third-party archives.
+
+Copyright [yyyy] [name of copyright owner]
+
+Licensed under the Apache License, Version 2.0 (the "License");
+you may not use this file except in compliance with the License.
+You may obtain a copy of the License at
+
+http://www.apache.org/licenses/LICENSE-2.0
+
+Unless required by applicable law or agreed to in writing, software
+distributed under the License is distributed on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+See the License for the specific language governing permissions and
+limitations under the License.
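The license's appendix asks for the boilerplate notice to be filled in and wrapped in the file format's comment syntax. A minimal sketch of that step for line-comment languages follows; `apache_notice` is a hypothetical helper, the owner name in the usage lines is a placeholder, and the four-space indentation of the URL line is an assumption (the diff view above does not preserve indentation).

```python
# Appendix boilerplate; {year} and {owner} stand in for [yyyy] and
# [name of copyright owner]. The URL indentation is an assumption.
_NOTICE = """Copyright {year} {owner}

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License."""


def apache_notice(year: int, owner: str, comment: str = "#") -> str:
    """Fill in the bracketed fields and prefix every line with a line comment."""
    body = _NOTICE.format(year=year, owner=owner)
    # Blank lines become a bare comment marker to avoid trailing spaces.
    return "\n".join(f"{comment} {line}" if line else comment for line in body.splitlines())


if __name__ == "__main__":
    print(apache_notice(2024, "Jane Doe"))  # placeholder owner, e.g. for .py files
    print(apache_notice(2024, "Jane Doe", comment="//"))  # e.g. for C-like files
```

Formats that only have block comments (HTML, CSS) would need a different wrapper; this sketch covers the single-line case only.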

custom_nodes/comfyui_controlnet_aux/NotoSans-Regular.ttf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6b04c8dd65af6b73eb4279472ed1580b29102d6496a377340e80a40cdb3b22c9
+size 455188
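Because of the LFS rule added to `.gitattributes`, the font is committed as a three-line pointer rather than the binary itself: a spec `version`, an `oid` (here a SHA-256 digest) and the real file's `size` in bytes. A minimal parser for pointers of exactly this shape is sketched below; `parse_lfs_pointer` and `LfsPointer` are hypothetical names, and the sketch does not implement the full git-lfs pointer validation rules.

```python
from dataclasses import dataclass


@dataclass
class LfsPointer:
    version: str  # spec URL from the "version" line
    oid: str      # hex digest, with the "sha256:" prefix stripped
    size: int     # size of the real file in bytes


def parse_lfs_pointer(text: str) -> LfsPointer:
    """Parse 'key value' pointer lines (hypothetical helper, not the git-lfs API)."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo!r}")
    return LfsPointer(version=fields["version"], oid=digest, size=int(fields["size"]))


if __name__ == "__main__":
    pointer = (
        "version https://git-lfs.github.com/spec/v1\n"
        "oid sha256:6b04c8dd65af6b73eb4279472ed1580b29102d6496a377340e80a40cdb3b22c9\n"
        "size 455188\n"
    )
    print(parse_lfs_pointer(pointer).size)  # 455188
```

The pointer says the actual NotoSans-Regular.ttf is 455,188 bytes; a checkout without `git lfs` installed would leave only this small text file in place of the font.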

custom_nodes/comfyui_controlnet_aux/README.md

ADDED

@@ -0,0 +1,252 @@
+# ComfyUI's ControlNet Auxiliary Preprocessors
+Plug-and-play [ComfyUI](https://github.com/comfyanonymous/ComfyUI) node sets for making [ControlNet](https://github.com/lllyasviel/ControlNet/) hint images
+
+"anime style, a protest in the street, cyberpunk city, a woman with pink hair and golden eyes (looking at the viewer) is holding a sign with the text "ComfyUI ControlNet Aux" in bold, neon pink" on Flux.1 Dev
+
+
+
+The code is copy-pasted from the respective folders in https://github.com/lllyasviel/ControlNet/tree/main/annotator and connected to [the 🤗 Hub](https://huggingface.co/lllyasviel/Annotators).
+
+All credit & copyright goes to https://github.com/lllyasviel.
+
+# Updates
+Go to [Update page](./UPDATES.md) to follow updates
+

+# Installation:
+## Using ComfyUI Manager (recommended):
+Install [ComfyUI Manager](https://github.com/ltdrdata/ComfyUI-Manager) and follow the steps described there to install this repo.
+
+## Alternative:
+If you're running on Linux, or on a non-admin account on Windows, make sure `/ComfyUI/custom_nodes` and `comfyui_controlnet_aux` have write permissions.
+
+There is now an **install.bat** you can run to install into the portable build if one is detected. Otherwise it defaults to the system Python and assumes you followed ComfyUI's manual installation steps.
+
+If you can't run **install.bat** (e.g. you are a Linux user), open a CMD/shell and do the following:
+- Navigate to your `/ComfyUI/custom_nodes/` folder
+- Run `git clone https://github.com/Fannovel16/comfyui_controlnet_aux/`
+- Navigate to your `comfyui_controlnet_aux` folder
+  - Portable/venv:
+    - Run `path/to/ComfyUI/python_embeded/python.exe -s -m pip install -r requirements.txt`
+  - With system python
+    - Run `pip install -r requirements.txt`
+- Start ComfyUI
+
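The manual steps above boil down to two checks: are the target folders writable, and is there an embedded (portable) interpreter to use instead of the system `pip`? The sketch below mirrors that choice under stated assumptions: "portable detected" is taken to mean the `python_embeded/python.exe` file from the README path exists, which may not match what `install.bat` actually checks, and `pip_install_command`, `not_writable` and the `path/to/ComfyUI` location are hypothetical.

```python
import os
from pathlib import Path
from typing import List


def pip_install_command(portable_python: Path) -> List[str]:
    """Choose between the README's two install routes.

    Assumption: "portable detected" means the embedded interpreter file exists;
    install.bat's real detection logic may differ.
    """
    if portable_python.is_file():
        # -s keeps the user site-packages directory off the interpreter's sys.path.
        return [str(portable_python), "-s", "-m", "pip", "install", "-r", "requirements.txt"]
    return ["pip", "install", "-r", "requirements.txt"]


def not_writable(folders: List[Path]) -> List[Path]:
    """List folders that are missing or not writable by the current user."""
    return [folder for folder in folders if not os.access(folder, os.W_OK)]


if __name__ == "__main__":
    comfy = Path("path/to/ComfyUI")  # hypothetical install location
    node_dir = comfy / "custom_nodes" / "comfyui_controlnet_aux"
    print(not_writable([comfy / "custom_nodes", node_dir]))
    # Run the printed command from inside node_dir so requirements.txt resolves.
    print(" ".join(pip_install_command(comfy / "python_embeded" / "python.exe")))
```

The command is returned as an argument list so it can be passed straight to `subprocess.run` without shell quoting issues.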

+# Nodes
+Please note that this repo only supports preprocessors making hint images (e.g. stickman, canny edge, etc).

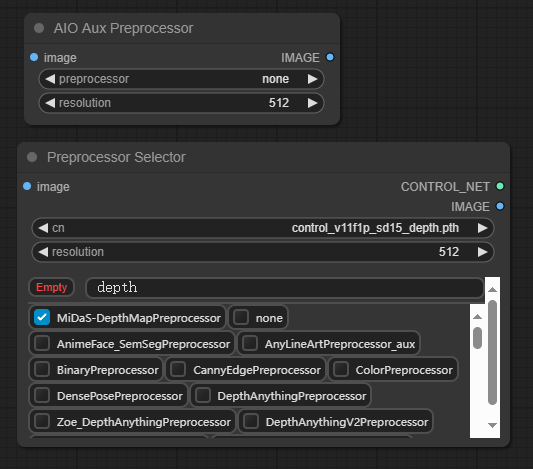

+All preprocessors except Inpaint are integrated into the `AIO Aux Preprocessor` node.
+This node lets you quickly pick a preprocessor, but the preprocessor's own threshold parameters can't be set from it.
+To set thresholds, use the preprocessor's dedicated node directly.
+
+# Nodes (sections are categories in Comfy menu)

+## Line Extractors
+| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
+|-----------------------------|---------------------------|-------------------------------------------|
+| Binary Lines | binary | control_scribble |
+| Canny Edge | canny | control_v11p_sd15_canny <br> control_canny <br> t2iadapter_canny |
+| HED Soft-Edge Lines | hed | control_v11p_sd15_softedge <br> control_hed |
+| Standard Lineart | standard_lineart | control_v11p_sd15_lineart |
+| Realistic Lineart | lineart (or `lineart_coarse` if `coarse` is enabled) | control_v11p_sd15_lineart |
+| Anime Lineart | lineart_anime | control_v11p_sd15s2_lineart_anime |
+| Manga Lineart | lineart_anime_denoise | control_v11p_sd15s2_lineart_anime |
+| M-LSD Lines | mlsd | control_v11p_sd15_mlsd <br> control_mlsd |
+| PiDiNet Soft-Edge Lines | pidinet | control_v11p_sd15_softedge <br> control_scribble |
+| Scribble Lines | scribble | control_v11p_sd15_scribble <br> control_scribble |
+| Scribble XDoG Lines | scribble_xdog | control_v11p_sd15_scribble <br> control_scribble |
+| Fake Scribble Lines | scribble_hed | control_v11p_sd15_scribble <br> control_scribble |
+| TEED Soft-Edge Lines | teed | [controlnet-sd-xl-1.0-softedge-dexined](https://huggingface.co/SargeZT/controlnet-sd-xl-1.0-softedge-dexined/blob/main/controlnet-sd-xl-1.0-softedge-dexined.safetensors) <br> control_v11p_sd15_softedge (Theoretically)
+| Scribble PiDiNet Lines | scribble_pidinet | control_v11p_sd15_scribble <br> control_scribble |
+| AnyLine Lineart | | mistoLine_fp16.safetensors <br> mistoLine_rank256 <br> control_v11p_sd15s2_lineart_anime <br> control_v11p_sd15_lineart |
+
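When porting a workflow from sd-webui-controlnet, the middle column of the Line Extractors table works as a lookup from node title to preprocessor name. The sketch below encodes that column as a dictionary, with the one conditional entry (Realistic Lineart switching to `lineart_coarse` when `coarse` is enabled) handled in code. `LINE_EXTRACTORS` and `webui_preprocessor` are hypothetical names for illustration, not part of this extension.

```python
from typing import Dict, Optional

# Node title -> sd-webui-controlnet preprocessor name, copied from the
# Line Extractors table. Realistic Lineart is handled separately because
# its name depends on the `coarse` option.
LINE_EXTRACTORS: Dict[str, Optional[str]] = {
    "Binary Lines": "binary",
    "Canny Edge": "canny",
    "HED Soft-Edge Lines": "hed",
    "Standard Lineart": "standard_lineart",
    "Anime Lineart": "lineart_anime",
    "Manga Lineart": "lineart_anime_denoise",
    "M-LSD Lines": "mlsd",
    "PiDiNet Soft-Edge Lines": "pidinet",
    "Scribble Lines": "scribble",
    "Scribble XDoG Lines": "scribble_xdog",
    "Fake Scribble Lines": "scribble_hed",
    "TEED Soft-Edge Lines": "teed",
    "Scribble PiDiNet Lines": "scribble_pidinet",
    "AnyLine Lineart": None,  # the table lists no sd-webui name
}


def webui_preprocessor(node: str, coarse: bool = False) -> Optional[str]:
    """Look up the sd-webui-controlnet name for a node (hypothetical helper)."""
    if node == "Realistic Lineart":
        return "lineart_coarse" if coarse else "lineart"
    return LINE_EXTRACTORS[node]  # KeyError for nodes outside this table
```

For example, `webui_preprocessor("Realistic Lineart", coarse=True)` returns `"lineart_coarse"`, and `webui_preprocessor("AnyLine Lineart")` returns `None` because no equivalent is listed.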

+## Normal and Depth Estimators
+| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
+|-----------------------------|---------------------------|-------------------------------------------|
+| MiDaS Depth Map | (normal) depth | control_v11f1p_sd15_depth <br> control_depth <br> t2iadapter_depth |
+| LeReS Depth Map | depth_leres | control_v11f1p_sd15_depth <br> control_depth <br> t2iadapter_depth |
+| Zoe Depth Map | depth_zoe | control_v11f1p_sd15_depth <br> control_depth <br> t2iadapter_depth |
+| MiDaS Normal Map | normal_map | control_normal |
+| BAE Normal Map | normal_bae | control_v11p_sd15_normalbae |
+| MeshGraphormer Hand Refiner ([HandRefiner](https://github.com/wenquanlu/HandRefiner)) | depth_hand_refiner | [control_sd15_inpaint_depth_hand_fp16](https://huggingface.co/hr16/ControlNet-HandRefiner-pruned/blob/main/control_sd15_inpaint_depth_hand_fp16.safetensors) |
+| Depth Anything | depth_anything | [Depth-Anything](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints_controlnet/diffusion_pytorch_model.safetensors) |
+| Zoe Depth Anything <br> (Basically Zoe but the encoder is replaced with DepthAnything) | depth_anything | [Depth-Anything](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints_controlnet/diffusion_pytorch_model.safetensors) |
+| Normal DSINE | | control_normal/control_v11p_sd15_normalbae |
+| Metric3D Depth | | control_v11f1p_sd15_depth <br> control_depth <br> t2iadapter_depth |
+| Metric3D Normal | | control_v11p_sd15_normalbae |
+| Depth Anything V2 | | [Depth-Anything](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints_controlnet/diffusion_pytorch_model.safetensors) |
+

| 76 |

+

## Faces and Poses Estimators

| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
|-----------------------------|---------------------------|-------------------------------------------|
| DWPose Estimator | dw_openpose_full | control_v11p_sd15_openpose <br> control_openpose <br> t2iadapter_openpose |
| OpenPose Estimator | openpose (detect_body) <br> openpose_hand (detect_body + detect_hand) <br> openpose_faceonly (detect_face) <br> openpose_full (detect_hand + detect_body + detect_face) | control_v11p_sd15_openpose <br> control_openpose <br> t2iadapter_openpose |
| MediaPipe Face Mesh | mediapipe_face | controlnet_sd21_laion_face_v2 |
| Animal Estimator | animal_openpose | [control_sd15_animal_openpose_fp16](https://huggingface.co/huchenlei/animal_openpose/blob/main/control_sd15_animal_openpose_fp16.pth) |

## Optical Flow Estimators

| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
|-----------------------------|---------------------------|-------------------------------------------|
| Unimatch Optical Flow | | [DragNUWA](https://github.com/ProjectNUWA/DragNUWA) |

### How to get OpenPose-format JSON?


#### User-side

This workflow saves images to ComfyUI's output folder (the same location as other output images). If you can't find the `Save Pose Keypoints` node, update this extension.

#### Dev-side


An array of [OpenPose-format JSON](https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/02_output.md#json-output-format) corresponding to each frame in an IMAGE batch can be retrieved from DWPose and OpenPose using `app.nodeOutputs` in the UI or the `/history` API endpoint. JSON output from AnimalPose uses a format similar to OpenPose JSON:

```
[
  {
    "version": "ap10k",
    "animals": [
      [[x1, y1, 1], [x2, y2, 1],..., [x17, y17, 1]],
      [[x1, y1, 1], [x2, y2, 1],..., [x17, y17, 1]],
      ...
    ],
    "canvas_height": 512,
    "canvas_width": 768
  },
  ...
]
```
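As a rough illustration of consuming the AnimalPose format above, the Python sketch below scales each keypoint into the [0, 1] range using the frame's canvas size. It is not part of the extension: it assumes every keypoint is `[x, y, flag]` in pixel coordinates and that a flag of `0` would mark a missing keypoint, and the helper name `normalize_animals` is hypothetical.

```python
import json
from typing import List, Optional, Tuple

# A normalized (x, y) point, or None when the keypoint is assumed missing.
Point = Optional[Tuple[float, float]]


def normalize_animals(frames_json: str) -> List[List[List[Point]]]:
    """Scale AnimalPose keypoints to [0, 1] using each frame's canvas size.

    Assumes every keypoint is [x, y, flag] in pixels and that a flag of 0
    marks a missing keypoint (returned as None).
    """
    frames = json.loads(frames_json)
    result = []
    for frame in frames:
        width = frame["canvas_width"]
        height = frame["canvas_height"]
        animals = []
        for animal in frame["animals"]:
            points = []
            for x, y, flag in animal:
                points.append((x / width, y / height) if flag else None)
            animals.append(points)
        result.append(animals)
    return result


example = json.dumps([
    {
        "version": "ap10k",
        "animals": [[[384, 256, 1], [0, 0, 0]]],  # truncated to 2 keypoints for brevity
        "canvas_height": 512,
        "canvas_width": 768,
    }
])
print(normalize_animals(example))  # [[[(0.5, 0.5), None]]]
```

Normalizing by the canvas size keeps the keypoints usable if the hint image is later resized.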

For extension developers (e.g. Openpose editor):

```js
const poseNodes = app.graph._nodes.filter(node => ["OpenposePreprocessor", "DWPreprocessor", "AnimalPosePreprocessor"].includes(node.type))
for (const poseNode of poseNodes) {
    const output = app.nodeOutputs[poseNode.id]
    if (!output?.openpose_json) continue // Skip nodes that haven't produced output yet
    const openposeResults = JSON.parse(output.openpose_json[0])
    console.log(openposeResults) // An array containing OpenPose JSON for each frame
}
```

For API users:

JavaScript

```js
import fetch from "node-fetch" // Remember to add "type": "module" to "package.json"

async function main() {
    const promptId = '792c1905-ecfe-41f4-8114-83e6a4a09a9f' // ID of an already-executed prompt (queueing one is not shown here)
    let history = await fetch(`http://127.0.0.1:8188/history/${promptId}`).then(re => re.json())
    history = history[promptId]
    const nodeOutputs = Object.values(history.outputs).filter(output => output.openpose_json)
    for (const nodeOutput of nodeOutputs) {
        const openposeResults = JSON.parse(nodeOutput.openpose_json[0])
        console.log(openposeResults) // An array containing OpenPose JSON for each frame
    }
}

main()
```

Python

```py
import json
import urllib.request

server_address = "127.0.0.1:8188"
prompt_id = ''  # Fill in the ID of an already-executed prompt (queueing one is not shown here)

def get_history(prompt_id):
    with urllib.request.urlopen("http://{}/history/{}".format(server_address, prompt_id)) as response:
        return json.loads(response.read())

history = get_history(prompt_id)[prompt_id]
for node_id, node_output in history['outputs'].items():
    if 'openpose_json' in node_output:
        print(json.loads(node_output['openpose_json'][0]))  # A list containing OpenPose JSON for each frame
```
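In the linked OpenPose format, each person's keypoints are stored as flat arrays such as `pose_keypoints_2d = [x0, y0, c0, x1, y1, c1, ...]`. The sketch below groups one frame (one element of the list printed above) into `(x, y, confidence)` triples. It assumes the DWPose/OpenPose output uses the CMU field names `people` and `pose_keypoints_2d` (check against a real output before relying on it) and that absent parts may be `null`; `keypoint_triples` is a hypothetical helper.

```python
from typing import List, Tuple

Triple = Tuple[float, float, float]


def keypoint_triples(frame: dict, field: str = "pose_keypoints_2d") -> List[List[Triple]]:
    """Group each person's flat [x, y, c, x, y, c, ...] array into (x, y, c) triples."""
    people = []
    for person in frame.get("people", []):
        flat = person.get(field) or []  # treat a missing/null field as "no keypoints"
        if len(flat) % 3 != 0:
            raise ValueError(f"{field} length {len(flat)} is not a multiple of 3")
        people.append([tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)])
    return people


frame = {
    "people": [{"pose_keypoints_2d": [100.0, 50.0, 0.9, 0.0, 0.0, 0.0]}],
    "canvas_height": 512,
    "canvas_width": 512,
}
print(keypoint_triples(frame))  # [[(100.0, 50.0, 0.9), (0.0, 0.0, 0.0)]]
```

Passing `field="hand_left_keypoints_2d"` (or another CMU field name) applies the same grouping to the other keypoint sets, assuming they are present.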

## Semantic Segmentation

| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
|-----------------------------|---------------------------|-------------------------------------------|
| OneFormer ADE20K Segmentor | oneformer_ade20k | control_v11p_sd15_seg |
| OneFormer COCO Segmentor | oneformer_coco | control_v11p_sd15_seg |
| UniFormer Segmentor | segmentation | control_sd15_seg <br> control_v11p_sd15_seg |

## T2IAdapter-only

| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
|-----------------------------|---------------------------|-------------------------------------------|
| Color Pallete | color | t2iadapter_color |
| Content Shuffle | shuffle | t2iadapter_style |

## Recolor

| Preprocessor Node | sd-webui-controlnet/other | ControlNet/T2I-Adapter |
|-----------------------------|---------------------------|-------------------------------------------|
| Image Luminance | recolor_luminance | [ioclab_sd15_recolor](https://huggingface.co/lllyasviel/sd_control_collection/resolve/main/ioclab_sd15_recolor.safetensors) <br> [sai_xl_recolor_256lora](https://huggingface.co/lllyasviel/sd_control_collection/resolve/main/sai_xl_recolor_256lora.safetensors) <br> [bdsqlsz_controlllite_xl_recolor_luminance](https://huggingface.co/bdsqlsz/qinglong_controlnet-lllite/resolve/main/bdsqlsz_controlllite_xl_recolor_luminance.safetensors) |
| Image Intensity | recolor_intensity | Unknown; possibly the same as above |

# Examples


> A picture is worth a thousand words


# Testing workflow

https://github.com/Fannovel16/comfyui_controlnet_aux/blob/main/examples/ExecuteAll.png

Input image: https://github.com/Fannovel16/comfyui_controlnet_aux/blob/main/examples/comfyui-controlnet-aux-logo.png

# Q&A:

## Why don't some nodes appear after I installed this repo?

This repo has a mechanism that skips any custom node that can't be imported. If you run into this, please open an issue on the [Issues tab](https://github.com/Fannovel16/comfyui_controlnet_aux/issues) and include the log from the command line.
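The skip-on-import-failure behaviour can be pictured with a short Python sketch. This is not the repository's actual loader: `load_nodes` and the module names are hypothetical, and the sketch only shows the general idea of importing each module separately, printing the traceback, and carrying on.

```python
import importlib
import traceback
from typing import Dict, List


def load_nodes(module_names: List[str]) -> Dict[str, object]:
    """Import each module independently; modules that fail to import are skipped."""
    loaded = {}
    for name in module_names:
        try:
            loaded[name] = importlib.import_module(name)
        except Exception:
            # This printed traceback is the kind of command-line log worth attaching to an issue.
            print(f"Skipping {name}: import failed")
            traceback.print_exc()
    return loaded


nodes = load_nodes(["json", "a_module_that_does_not_exist"])
print(sorted(nodes))  # ['json']
```

Catching the error per module means one broken dependency hides only the nodes that need it, rather than the whole extension.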

## DWPose/AnimalPose only uses the CPU, so it's slow. How can I make it use the GPU?

There are two ways to speed up DWPose: TorchScript checkpoints (`.torchscript.pt`) or ONNXRuntime (`.onnx`). The TorchScript route is slightly slower than ONNXRuntime, but it doesn't require any additional library and is still much faster than running on the CPU.

A TorchScript bbox detector is compatible with an ONNX pose estimator, and vice versa.

### TorchScript

Set `bbox_detector` and `pose_estimator` according to this picture. You can try other bbox detectors ending in `.torchscript.pt` to reduce bbox detection time if the input images are ideal.
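Since the backend follows each checkpoint's file ending, the bbox detector and pose estimator can run on different backends. The Python sketch below illustrates that suffix check. `backend_for` is a hypothetical helper and the extension's real selection code may differ; the fallback to the default cv2 backend for `.onnx` files when onnxruntime is missing follows the ONNXRuntime notes in this Q&A.

```python
def backend_for(checkpoint: str, onnxruntime_available: bool) -> str:
    """Guess which backend would run a DWPose/AnimalPose checkpoint (illustrative only)."""
    if checkpoint.endswith(".torchscript.pt"):
        return "torchscript"
    if checkpoint.endswith(".onnx"):
        # Without onnxruntime, .onnx checkpoints stay on the default cv2 backend.
        return "onnxruntime" if onnxruntime_available else "cv2"
    raise ValueError(f"Unrecognized checkpoint type: {checkpoint}")


# Mixing backends is allowed: a TorchScript detector with an ONNX pose estimator.
pair = {
    "bbox_detector": backend_for("yolox_l.torchscript.pt", onnxruntime_available=True),
    "pose_estimator": backend_for("dw-ll_ucoco_384.onnx", onnxruntime_available=True),
}
print(pair)  # {'bbox_detector': 'torchscript', 'pose_estimator': 'onnxruntime'}
```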

### ONNXRuntime

If onnxruntime is installed successfully and the checkpoint in use ends with `.onnx`, it replaces the default cv2 backend to take advantage of the GPU. Note that if you are using an NVIDIA card, this method currently only works on CUDA 11.8 (ComfyUI_windows_portable_nvidia_cu118_or_cpu.7z) unless you compile onnxruntime yourself.

1. Determine which onnxruntime build you need:
   * NVIDIA CUDA 11.x or below/AMD GPU: `onnxruntime-gpu`
   * NVIDIA CUDA 12.x: `onnxruntime-gpu --extra-index-url https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple/`
   * DirectML: `onnxruntime-directml`
   * OpenVINO: `onnxruntime-openvino`

   Note: if this is your first time using ComfyUI, please check that it runs on your device before doing the next steps.

2. Add it to `requirements.txt`.

3. Run `install.bat` or the pip command mentioned in Installation.
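After step 3, one way to check whether the installed build can use an accelerator is to list onnxruntime's execution providers with `onnxruntime.get_available_providers()`. A sketch, assuming onnxruntime is importable from ComfyUI's Python environment; `accelerated_providers` is a hypothetical helper, and the provider names are onnxruntime's standard ones for CUDA, ROCm, DirectML and OpenVINO.

```python
from typing import List

# onnxruntime execution provider names for the accelerated builds listed above.
ACCELERATED_PROVIDERS = (
    "CUDAExecutionProvider",      # onnxruntime-gpu on NVIDIA
    "ROCMExecutionProvider",      # AMD ROCm builds
    "DmlExecutionProvider",       # onnxruntime-directml
    "OpenVINOExecutionProvider",  # onnxruntime-openvino
)


def accelerated_providers(available: List[str]) -> List[str]:
    """Return the non-CPU providers present in `available`, keeping their order."""
    return [p for p in available if p in ACCELERATED_PROVIDERS]


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed; DWPose will keep the default cv2 backend")
    else:
        found = accelerated_providers(ort.get_available_providers())
        print(found or "Only CPU providers found; check that the right build is installed")
```

If only `CPUExecutionProvider` shows up, the CPU-only `onnxruntime` package may have been installed instead of the build chosen in step 1.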

# Asset files of preprocessors

* anime_face_segment: [bdsqlsz/qinglong_controlnet-lllite/Annotators/UNet.pth](https://huggingface.co/bdsqlsz/qinglong_controlnet-lllite/blob/main/Annotators/UNet.pth), [anime-seg/isnetis.ckpt](https://huggingface.co/skytnt/anime-seg/blob/main/isnetis.ckpt)
* densepose: [LayerNorm/DensePose-TorchScript-with-hint-image/densepose_r50_fpn_dl.torchscript](https://huggingface.co/LayerNorm/DensePose-TorchScript-with-hint-image/blob/main/densepose_r50_fpn_dl.torchscript)
* dwpose:
  * bbox_detector: Either [yzd-v/DWPose/yolox_l.onnx](https://huggingface.co/yzd-v/DWPose/blob/main/yolox_l.onnx), [hr16/yolox-onnx/yolox_l.torchscript.pt](https://huggingface.co/hr16/yolox-onnx/blob/main/yolox_l.torchscript.pt), [hr16/yolo-nas-fp16/yolo_nas_l_fp16.onnx](https://huggingface.co/hr16/yolo-nas-fp16/blob/main/yolo_nas_l_fp16.onnx), [hr16/yolo-nas-fp16/yolo_nas_m_fp16.onnx](https://huggingface.co/hr16/yolo-nas-fp16/blob/main/yolo_nas_m_fp16.onnx), [hr16/yolo-nas-fp16/yolo_nas_s_fp16.onnx](https://huggingface.co/hr16/yolo-nas-fp16/blob/main/yolo_nas_s_fp16.onnx)
  * pose_estimator: Either [hr16/DWPose-TorchScript-BatchSize5/dw-ll_ucoco_384_bs5.torchscript.pt](https://huggingface.co/hr16/DWPose-TorchScript-BatchSize5/blob/main/dw-ll_ucoco_384_bs5.torchscript.pt), [yzd-v/DWPose/dw-ll_ucoco_384.onnx](https://huggingface.co/yzd-v/DWPose/blob/main/dw-ll_ucoco_384.onnx)
* animal_pose (ap10k):
  * bbox_detector: Either [yzd-v/DWPose/yolox_l.onnx](https://huggingface.co/yzd-v/DWPose/blob/main/yolox_l.onnx), [hr16/yolox-onnx/yolox_l.torchscript.pt](https://huggingface.co/hr16/yolox-onnx/blob/main/yolox_l.torchscript.pt), [hr16/yolo-nas-fp16/yolo_nas_l_fp16.onnx](https://huggingface.co/hr16/yolo-nas-fp16/blob/main/yolo_nas_l_fp16.onnx), [hr16/yolo-nas-fp16/yolo_nas_m_fp16.onnx](https://huggingface.co/hr16/yolo-nas-fp16/blob/main/yolo_nas_m_fp16.onnx), [hr16/yolo-nas-fp16/yolo_nas_s_fp16.onnx](https://huggingface.co/hr16/yolo-nas-fp16/blob/main/yolo_nas_s_fp16.onnx)
  * pose_estimator: Either [hr16/DWPose-TorchScript-BatchSize5/rtmpose-m_ap10k_256_bs5.torchscript.pt](https://huggingface.co/hr16/DWPose-TorchScript-BatchSize5/blob/main/rtmpose-m_ap10k_256_bs5.torchscript.pt), [hr16/UnJIT-DWPose/rtmpose-m_ap10k_256.onnx](https://huggingface.co/hr16/UnJIT-DWPose/blob/main/rtmpose-m_ap10k_256.onnx)
* hed: [lllyasviel/Annotators/ControlNetHED.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/ControlNetHED.pth)
* leres: [lllyasviel/Annotators/res101.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/res101.pth), [lllyasviel/Annotators/latest_net_G.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/latest_net_G.pth)
* lineart: [lllyasviel/Annotators/sk_model.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/sk_model.pth), [lllyasviel/Annotators/sk_model2.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/sk_model2.pth)
* lineart_anime: [lllyasviel/Annotators/netG.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/netG.pth)
* manga_line: [lllyasviel/Annotators/erika.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/erika.pth)
* mesh_graphormer: [hr16/ControlNet-HandRefiner-pruned/graphormer_hand_state_dict.bin](https://huggingface.co/hr16/ControlNet-HandRefiner-pruned/blob/main/graphormer_hand_state_dict.bin), [hr16/ControlNet-HandRefiner-pruned/hrnetv2_w64_imagenet_pretrained.pth](https://huggingface.co/hr16/ControlNet-HandRefiner-pruned/blob/main/hrnetv2_w64_imagenet_pretrained.pth)
* midas: [lllyasviel/Annotators/dpt_hybrid-midas-501f0c75.pt](https://huggingface.co/lllyasviel/Annotators/blob/main/dpt_hybrid-midas-501f0c75.pt)
* mlsd: [lllyasviel/Annotators/mlsd_large_512_fp32.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/mlsd_large_512_fp32.pth)
* normalbae: [lllyasviel/Annotators/scannet.pt](https://huggingface.co/lllyasviel/Annotators/blob/main/scannet.pt)
* oneformer: [lllyasviel/Annotators/250_16_swin_l_oneformer_ade20k_160k.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/250_16_swin_l_oneformer_ade20k_160k.pth)
* open_pose: [lllyasviel/Annotators/body_pose_model.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/body_pose_model.pth), [lllyasviel/Annotators/hand_pose_model.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/hand_pose_model.pth), [lllyasviel/Annotators/facenet.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/facenet.pth)
* pidi: [lllyasviel/Annotators/table5_pidinet.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/table5_pidinet.pth)
* sam: [dhkim2810/MobileSAM/mobile_sam.pt](https://huggingface.co/dhkim2810/MobileSAM/blob/main/mobile_sam.pt)
* uniformer: [lllyasviel/Annotators/upernet_global_small.pth](https://huggingface.co/lllyasviel/Annotators/blob/main/upernet_global_small.pth)
* zoe: [lllyasviel/Annotators/ZoeD_M12_N.pt](https://huggingface.co/lllyasviel/Annotators/blob/main/ZoeD_M12_N.pt)
* teed: [bdsqlsz/qinglong_controlnet-lllite/Annotators/7_model.pth](https://huggingface.co/bdsqlsz/qinglong_controlnet-lllite/blob/main/Annotators/7_model.pth)
* depth_anything: Either [LiheYoung/Depth-Anything/checkpoints/depth_anything_vitl14.pth](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints/depth_anything_vitl14.pth), [LiheYoung/Depth-Anything/checkpoints/depth_anything_vitb14.pth](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints/depth_anything_vitb14.pth) or [LiheYoung/Depth-Anything/checkpoints/depth_anything_vits14.pth](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints/depth_anything_vits14.pth)
* diffusion_edge: Either [hr16/Diffusion-Edge/diffusion_edge_indoor.pt](https://huggingface.co/hr16/Diffusion-Edge/blob/main/diffusion_edge_indoor.pt), [hr16/Diffusion-Edge/diffusion_edge_urban.pt](https://huggingface.co/hr16/Diffusion-Edge/blob/main/diffusion_edge_urban.pt) or [hr16/Diffusion-Edge/diffusion_edge_natrual.pt](https://huggingface.co/hr16/Diffusion-Edge/blob/main/diffusion_edge_natrual.pt)
* unimatch: Either [hr16/Unimatch/gmflow-scale2-regrefine6-mixdata.pth](https://huggingface.co/hr16/Unimatch/blob/main/gmflow-scale2-regrefine6-mixdata.pth), [hr16/Unimatch/gmflow-scale2-mixdata.pth](https://huggingface.co/hr16/Unimatch/blob/main/gmflow-scale2-mixdata.pth) or [hr16/Unimatch/gmflow-scale1-mixdata.pth](https://huggingface.co/hr16/Unimatch/blob/main/gmflow-scale1-mixdata.pth)
* zoe_depth_anything: Either [LiheYoung/Depth-Anything/checkpoints_metric_depth/depth_anything_metric_depth_indoor.pt](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints_metric_depth/depth_anything_metric_depth_indoor.pt) or [LiheYoung/Depth-Anything/checkpoints_metric_depth/depth_anything_metric_depth_outdoor.pt](https://huggingface.co/spaces/LiheYoung/Depth-Anything/blob/main/checkpoints_metric_depth/depth_anything_metric_depth_outdoor.pt)
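All of the asset links above point at Hugging Face `blob/main/...` pages. To pre-fetch a checkpoint outside ComfyUI, a page URL can be split into the keyword arguments of `huggingface_hub.hf_hub_download`. A minimal sketch, assuming the `huggingface_hub` package for the actual download; `parse_hf_blob_url` is a hypothetical helper, and where the extension expects the downloaded file to live is not covered here.

```python
from typing import Dict
from urllib.parse import urlparse


def parse_hf_blob_url(url: str) -> Dict[str, str]:
    """Split a Hugging Face blob URL into hf_hub_download() keyword arguments."""
    parts = urlparse(url).path.strip("/").split("/")
    repo_type = "model"
    if parts and parts[0] == "spaces":  # Space repos are prefixed with /spaces/
        repo_type = "space"
        parts = parts[1:]
    if len(parts) < 5:
        raise ValueError(f"Not a file URL: {url}")
    owner, name, kind, revision, *file_parts = parts
    if kind not in ("blob", "resolve"):
        raise ValueError(f"Not a file URL: {url}")
    return {
        "repo_id": f"{owner}/{name}",
        "repo_type": repo_type,
        "revision": revision,
        "filename": "/".join(file_parts),
    }


args = parse_hf_blob_url(
    "https://huggingface.co/bdsqlsz/qinglong_controlnet-lllite/blob/main/Annotators/UNet.pth"
)
print(args)
# {'repo_id': 'bdsqlsz/qinglong_controlnet-lllite', 'repo_type': 'model', 'revision': 'main', 'filename': 'Annotators/UNet.pth'}

# With huggingface_hub installed (needs network access):
# from huggingface_hub import hf_hub_download
# path = hf_hub_download(**args)
```

The `spaces/` prefix matters for the Depth-Anything links, which live in a Space rather than a model repo.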

# 2000 Stars 😄

<a href="https://star-history.com/#Fannovel16/comfyui_controlnet_aux&Date">
<picture>
<source media="(prefers-color-scheme: dark)" srcset="https://api.star-history.com/svg?repos=Fannovel16/comfyui_controlnet_aux&type=Date&theme=dark" />
<source media="(prefers-color-scheme: light)" srcset="https://api.star-history.com/svg?repos=Fannovel16/comfyui_controlnet_aux&type=Date" />
<img alt="Star History Chart" src="https://api.star-history.com/svg?repos=Fannovel16/comfyui_controlnet_aux&type=Date" />
</picture>
</a>

Thanks for y'all's support. I never thought the star graph would be linear lol.

custom_nodes/comfyui_controlnet_aux/UPDATES.md

ADDED

@@ -0,0 +1,44 @@

* `AIO Aux Preprocessor` integrating all loadable aux preprocessors as dropdown options. Easy to copy, paste and get the preprocessor faster.
* Added OpenPose-format JSON output from OpenPose Preprocessor and DWPose Preprocessor. Check [here](#faces-and-poses).
* Fixed wrong model path when downloading DWPose.
* Made hint images less blurry.
* Added `resolution` option, `PixelPerfectResolution` and `HintImageEnchance` nodes (TODO: Documentation).
* Added `RAFT Optical Flow Embedder` for TemporalNet2 (TODO: Workflow example).
* Fixed OpenCV conflicts between this extension, [ReActor](https://github.com/Gourieff/comfyui-reactor-node) and Roop. Thanks `Gourieff` for [the solution](https://github.com/Fannovel16/comfyui_controlnet_aux/issues/7#issuecomment-1734319075)!
* Removed RAFT, as the code behind it doesn't match what the original code does.
* Changed `lineart`'s display name from `Normal Lineart` to `Realistic Lineart`. This change won't affect old workflows.
* Added support for `onnxruntime` to speed up DWPose (see the Q&A).
* Fixed `TypeError: expected size to be one of int or Tuple[int] or Tuple[int, int] or Tuple[int, int, int], but got size with types [<class 'numpy.int64'>, <class 'numpy.int64'>]`: [Issue](https://github.com/Fannovel16/comfyui_controlnet_aux/issues/2), [PR](https://github.com/Fannovel16/comfyui_controlnet_aux/pull/71)
* Fixed an incorrect shape in ImageGenResolutionFromImage (https://github.com/Fannovel16/comfyui_controlnet_aux/pull/74)
* Fixed LeReS and MiDaS incompatibility with MPS devices
* Fixed DWPose checking the onnxruntime session multiple times: https://github.com/Fannovel16/comfyui_controlnet_aux/issues/89
* Added `Anime Face Segmentor` (in `ControlNet Preprocessors/Semantic Segmentation`) for [ControlNet AnimeFaceSegmentV2](https://huggingface.co/bdsqlsz/qinglong_controlnet-lllite#animefacesegmentv2). Check [here](#anime-face-segmentor)
* Changed download functions and fixed [download error](https://github.com/Fannovel16/comfyui_controlnet_aux/issues/39): [PR](https://github.com/Fannovel16/comfyui_controlnet_aux/pull/96)
* DWPose onnxruntime is now cached during the first use of the DWPose node instead of at ComfyUI startup
* Added alternative YOLOX models for faster speed when using DWPose
* Added alternative DWPose models
* Implemented the preprocessor for [AnimalPose ControlNet](https://github.com/abehonest/ControlNet_AnimalPose/tree/main). Check [Animal Pose AP-10K](#animal-pose-ap-10k)
* Added YOLO-NAS models, which are drop-in replacements for YOLOX
* Fixed OpenPose face/hands no longer being detected: https://github.com/Fannovel16/comfyui_controlnet_aux/issues/54
* Added TorchScript implementation of DWPose and AnimalPose
* Added TorchScript implementation of DensePose from [Colab notebook](https://colab.research.google.com/drive/16hcaaKs210ivpxjoyGNuvEXZD4eqOOSQ), which doesn't require detectron2. [Example](#densepose). Thanks [@LayerNome](https://github.com/Layer-norm) for fixing related bugs.
* Added Standard Lineart Preprocessor
* Fixed OpenPose misplacements in some cases
* Added Mesh Graphormer - Hand Depth Map & Mask
* Fixed misaligned hands bug in MeshGraphormer
* Added more mask options for MeshGraphormer
* Added Save Pose Keypoint node for editing
* Added Unimatch Optical Flow
* Added Depth Anything & Zoe Depth Anything
* Removed resolution field from Unimatch Optical Flow, as interpolating optical flow seems unstable
* Added TEED Soft-Edge Preprocessor
* Added DiffusionEdge
* Added Image Luminance and Image Intensity
* Added Normal DSINE
* Added TTPlanet Tile (09/05/2024, DD/MM/YYYY)
* Added AnyLine, Metric3D (18/05/2024)
* Added Depth Anything V2 (16/06/2024)
* Added Union model of ControlNet and preprocessors

* Refactored INPUT_TYPES and added the Execute All node while learning about [Execution Model Inversion](https://github.com/comfyanonymous/ComfyUI/pull/2666)
* Added scale_stick_for_xinsr_cn (https://github.com/Fannovel16/comfyui_controlnet_aux/issues/447) (09/04/2024)

custom_nodes/comfyui_controlnet_aux/__init__.py

ADDED

@@ -0,0 +1,214 @@