Improve model card: Add pipeline tag, library, links, usage & citation

#1 opened by nielsr (HF Staff)

README.md CHANGED

|

@@ -1,3 +1,72 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: cc-by-nc-4.0

|

| 3 |

-


| 1 |

+

---

|

| 2 |

+

license: cc-by-nc-4.0

|

| 3 |

+

pipeline_tag: image-to-image

|

| 4 |

+

library_name: pytorch

|

| 5 |

+

---

|

| 6 |

+

|

| 7 |

+

# Flow Poke Transformer (FPT)

|

| 8 |

+

|

| 9 |

+

[Project Page](https://compvis.github.io/flow-poke-transformer/)

|

| 10 |

+

[Paper](https://huggingface.co/papers/2510.12777)

|

| 11 |

+

[GitHub](https://github.com/CompVis/flow-poke-transformer)

|

| 12 |

+

[Hugging Face Model](https://huggingface.co/CompVis/flow-poke-transformer)

|

| 13 |

+

|

| 14 |

+

## Paper and Abstract

|

| 15 |

+

|

| 16 |

+

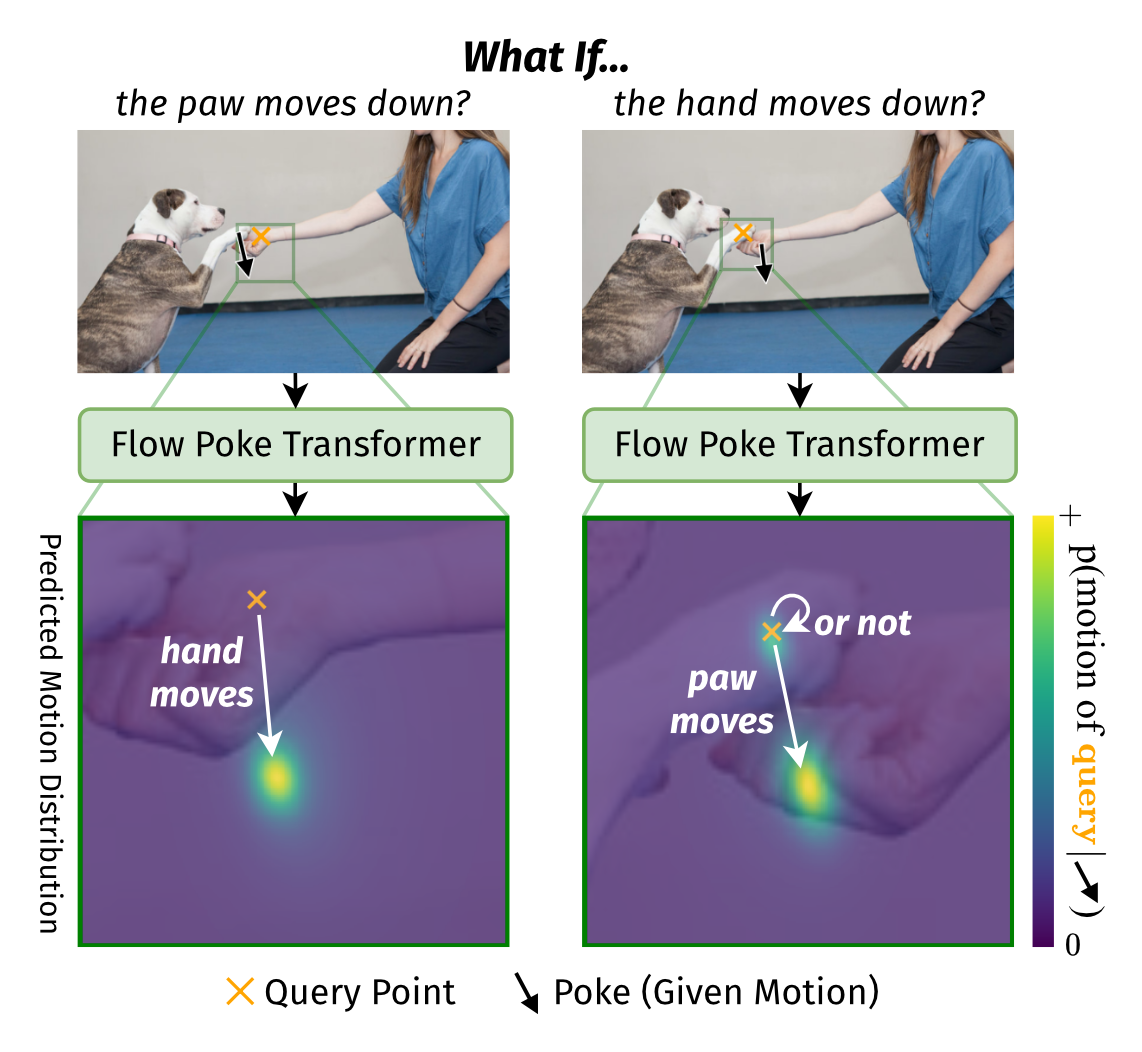

The Flow Poke Transformer (FPT) was presented in the paper [What If: Understanding Motion Through Sparse Interactions](https://huggingface.co/papers/2510.12777).

|

| 17 |

+

|

| 18 |

+

FPT is a novel framework for directly predicting the distribution of local motion, conditioned on sparse interactions termed "pokes". Unlike traditional methods that typically only enable dense sampling of a single realization of scene dynamics, FPT provides an interpretable, directly accessible representation of multi-modal scene motion, its dependency on physical interactions, and the inherent uncertainties of scene dynamics. The model has been evaluated on several downstream tasks, demonstrating competitive performance in dense face motion generation, articulated object motion estimation, and moving part segmentation from pokes.

|

| 19 |

+

|

| 20 |

+

## Project Page and Code

|

| 21 |

+

|

| 22 |

+

* **Project Page:** [https://compvis.github.io/flow-poke-transformer/](https://compvis.github.io/flow-poke-transformer/)

|

| 23 |

+

* **GitHub Repository:** [https://github.com/CompVis/flow-poke-transformer](https://github.com/CompVis/flow-poke-transformer)

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

_FPT predicts distributions of potential motion for sparse points. Left: the paw pushing the hand down will force the hand downwards, resulting in a unimodal distribution. Right: the hand moving down results in two modes, the paw following along or staying put._

|

| 27 |

+

|

| 28 |

+

## Usage

|

| 29 |

+

|

| 30 |

+

The easiest way to try FPT is via our interactive demo:

|

| 31 |

+

|

| 32 |

+

```shell

|

| 33 |

+

python -m scripts.demo.app --compile True --warmup_compiled_paths True

|

| 34 |

+

```

|

| 35 |

+

|

| 36 |

+

Compilation is optional but recommended for a smoother interactive experience. If no checkpoint is specified via the CLI, one is downloaded from Hugging Face by default.
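
To launch the demo from Python rather than from a shell, the command above can be assembled as an argument list. This is a minimal sketch: `build_demo_command` is a hypothetical helper, and it assumes that omitting both flags simply runs the demo without compilation.

```python
import sys


def build_demo_command(compile_model: bool = True) -> list[str]:
    """Build the argv list for launching the FPT demo app."""
    # Use the current interpreter so the demo runs in the same environment.
    cmd = [sys.executable, "-m", "scripts.demo.app"]
    if compile_model:
        # Both flags are taken verbatim from the documented command.
        cmd += ["--compile", "True", "--warmup_compiled_paths", "True"]
    # Assumption: leaving the flags out runs the demo without compilation.
    return cmd
```

From the repository root, the result could be passed to `subprocess.run(build_demo_command(), check=True)`.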

|

| 37 |

+

|

| 38 |

+

For programmatic use, the simplest route is `torch.hub`:

|

| 39 |

+

|

| 40 |

+

```python

|

| 41 |

+

import torch

|

| 42 |

+

|

| 43 |

+

model = torch.hub.load("CompVis/flow-poke-transformer", "fpt_base")

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

If you wish to integrate FPT into your own codebase, you can copy `model.py` and `dinov2.py` from the [GitHub repository](https://github.com/CompVis/flow-poke-transformer). The model can then be instantiated as follows:

|

| 47 |

+

|

| 48 |

+

```python

|

| 49 |

+

import torch

|

| 50 |

+

from flow_poke.model import FlowPokeTransformer_Base

|

| 51 |

+

|

| 52 |

+

model: FlowPokeTransformer_Base = FlowPokeTransformer_Base()

|

| 53 |

+

state_dict = torch.load("fpt_base.pt", map_location="cpu")  # Download the weights separately first

|

| 54 |

+

model.load_state_dict(state_dict)

|

| 55 |

+

model.requires_grad_(False)

|

| 56 |

+

model.eval()

|

| 57 |

+

```
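
The weights for the snippet above have to be obtained separately. One possible way is the `huggingface_hub` client, sketched below. `download_fpt_weights` is a hypothetical helper, and the checkpoint's file name inside the model repository is not stated here, so the caller has to supply it.

```python
def download_fpt_weights(
    filename: str, repo_id: str = "CompVis/flow-poke-transformer"
) -> str:
    """Fetch a checkpoint file from the Hugging Face Hub and return its local path."""
    # Imported lazily so this helper can be defined without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    # `filename` must match a file that actually exists in the model repository;
    # check the repository's file listing, since this card does not name it.
    return hf_hub_download(repo_id=repo_id, filename=filename)
```

The returned path can then be passed to `torch.load(...)` in place of the local `"fpt_base.pt"`.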

|

| 58 |

+

|

| 59 |

+

The `FlowPokeTransformer` class contains all necessary methods for various applications. For high-level usage, refer to the `FlowPokeTransformer.predict_*()` methods. For low-level usage, the module's `forward()` can be used.
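
Since the individual `predict_*()` methods are not listed here, a quick way to discover them is plain Python introspection. The helper below is a hypothetical sketch that works on any object and makes no assumptions about the model's actual method names or signatures.

```python
def list_predict_methods(obj) -> list[str]:
    """Return the sorted names of callable attributes that start with 'predict_'."""
    return sorted(
        name
        for name in dir(obj)
        # Keep only callables so plain data attributes are skipped.
        if name.startswith("predict_") and callable(getattr(obj, name))
    )
```

Calling `list_predict_methods(model)` on a loaded model prints the available high-level entry points, and `help(getattr(model, name))` shows the docstring for any of them.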

|

| 60 |

+

|

| 61 |

+

## Citation

|

| 62 |

+

|

| 63 |

+

If you find our model or code useful, please cite our paper:

|

| 64 |

+

|

| 65 |

+

```bibtex

|

| 66 |

+

@inproceedings{baumann2025whatif,

|

| 67 |

+

title={What If: Understanding Motion Through Sparse Interactions},

|

| 68 |

+

author={Stefan Andreas Baumann and Nick Stracke and Timy Phan and Bj{\"o}rn Ommer},

|

| 69 |

+

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

|

| 70 |

+

year={2025}

|

| 71 |

+

}

|

| 72 |

+

```

|